How to configure RAID level 5 in Linux

Although understanding the concepts of RAID is essential, it is not advisable to cover configuration methods for a specific vendor's RAID controller, because controllers from different companies use their own proprietary methods to configure hardware RAID.

So we have decided to cover the theory of RAID along with the configuration of different levels of software RAID in Linux. We have already covered how to configure software RAID levels 0 and 1.

Read: Software Raid Level 0 configuration in linux

Read: Software Raid Level 1 in Linux

Read: Modify your swap space by configuring swap over LVM.

Read: How to create RAID on Loop Devices.

In this post we will go through the complete steps to configure RAID level 5 in Linux, along with the commands involved.

In RAID level 5, data is striped across multiple disks. The main advantage is that RAID 5 also provides redundancy with the help of parity. In hardware RAID, parity is calculated by XOR gates on the controller; in software RAID the same XOR calculation is done by the CPU. Writes are a bit slower in RAID levels with parity because of the overhead of calculating parity for the data stripes across the disks.

So let's go through the steps to configure RAID level 5 in Linux.

- Create new partitions /dev/sda11, /dev/sda12 and /dev/sda13.

- Change the partition type to RAID.

- Save the changes and update the partition table using partprobe.

- Create the RAID 5 device from /dev/sda11, /dev/sda12 and /dev/sda13 using the mdadm command.

- Format the RAID device.

- View the updated RAID information.
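The steps above could be strung together roughly as follows. This is only a sketch: the `run` and `build_raid5` helpers and the `DRY_RUN` variable are hypothetical names introduced here, and it assumes the partitions have already been created and set to type fd with fdisk. By default it only prints the commands instead of running them.

```shell
#!/bin/sh
# DRY_RUN=1 (the default) prints each command; DRY_RUN=0 executes it (needs root).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# build_raid5 MD_DEVICE MEMBER...   (hypothetical helper, not part of mdadm)
build_raid5() {
    md="$1"; shift                       # remaining arguments are the members
    run partprobe                        # make the kernel re-read the partition table
    run mdadm --create "$md" --level=5 --raid-devices="$#" "$@"
    run mke2fs -j "$md"                  # ext3 = ext2 plus a journal
    run mdadm --detail "$md"             # view the result
}

build_raid5 /dev/md5 /dev/sda11 /dev/sda12 /dev/sda13
```

Keeping the dry run as the default makes it possible to read the exact commands before anything touches a disk.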

ATTENTION PLEASE: Instead of three separate hard disks, I have used three partitions on a single disk, just to show how to configure software RAID 5. If you want to implement RAID in real life, you need three separate hard drives, because partitions on one disk all fail together with that disk.

Also, the more drives you use, the lower the redundancy overhead: RAID 5 always spends one drive's worth of capacity on parity, so adding drives shrinks the share of space given up to redundancy. Very soon I will write an article on RAID 5 using three separate hard drives, set up the same way as in a production environment.
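The capacity arithmetic behind this can be sketched in a few lines of shell. The function names are made up for illustration, and the 104320 KiB member size is the "Used Dev Size" reported by mdadm --detail later in this post.

```shell
#!/bin/sh
# Usable RAID 5 capacity: one member's worth of space holds parity,
# so n equal-sized members give (n - 1) * size.
raid5_usable_kib() {
    n="$1"          # number of member devices (at least 3)
    size_kib="$2"   # size of each member in KiB
    echo $(( (n - 1) * size_kib ))
}

# Share of raw capacity spent on parity, as an integer percentage (rounded down).
raid5_parity_percent() {
    echo $(( 100 / $1 ))
}

raid5_usable_kib 3 104320     # prints 208640
raid5_parity_percent 3        # prints 33
raid5_parity_percent 6        # prints 16
```

The first result matches the "Array Size" of 208640 that mdadm reports for the three-partition array built below.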

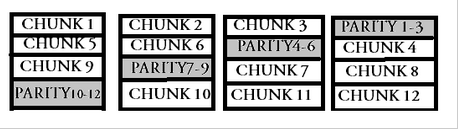

In the diagram above you can see that parity is distributed across all the disks. So even if one disk fails, the data on that disk can be recalculated from the remaining data and the parity on the other disks.
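A tiny illustration of that recalculation, using shell arithmetic on single bytes. This is not how the md driver is implemented; it only shows the XOR property that RAID 5 parity relies on, and the two byte values are arbitrary.

```shell
#!/bin/sh
# Two data chunks of one stripe (single bytes here, for readability).
d1=$(( 0xA5 ))
d2=$(( 0x3C ))

# Parity chunk: bitwise XOR of the data chunks.
parity=$(( d1 ^ d2 ))

# Pretend the disk holding d2 has failed: XOR the survivors to get it back.
rebuilt_d2=$(( d1 ^ parity ))

printf 'parity     = 0x%02X\n' "$parity"       # prints parity     = 0x99
printf 'rebuilt d2 = 0x%02X\n' "$rebuilt_d2"   # prints rebuilt d2 = 0x3C
```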

So let's begin this tutorial by creating the partitions (which will act as the physical disks in software RAID level 5).

[root@localhost ~]# fdisk /dev/sda

The number of cylinders for this disk is set to 19457.
There is nothing wrong with that, but this is larger than 1024,
and could in certain setups cause problems with:
1) software that runs at boot time (e.g., old versions of LILO)
2) booting and partitioning software from other OSs
   (e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): n
First cylinder (18934-19457, default 18934):
Using default value 18934
Last cylinder or +size or +sizeM or +sizeK (18934-19457, default 19457): +100M

Command (m for help): n
First cylinder (18947-19457, default 18947):
Using default value 18947
Last cylinder or +size or +sizeM or +sizeK (18947-19457, default 19457): +100M

Command (m for help): n
First cylinder (18960-19457, default 18960):
Using default value 18960
Last cylinder or +size or +sizeM or +sizeK (18960-19457, default 19457): +100M

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
The kernel still uses the old table.
The new table will be used at the next reboot.
Syncing disks.
[root@localhost ~]#

The next thing to do after creating the partitions is to tell the Linux system that these partitions will be used for RAID. This is achieved by changing the partition type to RAID (type fd, Linux raid autodetect).

CHANGE THE TYPE OF PARTITION TO RAID TYPE:

[root@localhost ~]# fdisk /dev/sda

The number of cylinders for this disk is set to 19457.
There is nothing wrong with that, but this is larger than 1024,
and could in certain setups cause problems with:
1) software that runs at boot time (e.g., old versions of LILO)
2) booting and partitioning software from other OSs
   (e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): t
Partition number (1-13): 13
Hex code (type L to list codes): fd
Changed system type of partition 13 to fd (Linux raid autodetect)

Command (m for help): t
Partition number (1-13): 12
Hex code (type L to list codes): fd
Changed system type of partition 12 to fd (Linux raid autodetect)

Command (m for help): t
Partition number (1-13): 11
Hex code (type L to list codes): fd
Changed system type of partition 11 to fd (Linux raid autodetect)

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.
The kernel still uses the old table.
The new table will be used at the next reboot.
Syncing disks.
[root@localhost ~]# partprobe

CREATE RAID 5 DEVICE:

[root@localhost ~]# mdadm --create /dev/md5 --level=5 --raid-devices=3 /dev/sda11 /dev/sda12 /dev/sda13
mdadm: array /dev/md5 started.

VIEW RAID DEVICE INFORMATION IN DETAIL:

[root@localhost ~]# mdadm --detail /dev/md5
/dev/md5:
        Version : 0.90
  Creation Time : Tue Apr 9 17:22:18 2013
     Raid Level : raid5
     Array Size : 208640 (203.78 MiB 213.65 MB)
  Used Dev Size : 104320 (101.89 MiB 106.82 MB)
   Raid Devices : 3
  Total Devices : 3
Preferred Minor : 5
    Persistence : Superblock is persistent

    Update Time : Tue Apr 9 17:22:31 2013
          State : clean
 Active Devices : 3
Working Devices : 3
 Failed Devices : 0
  Spare Devices : 0

         Layout : left-symmetric
     Chunk Size : 64K

           UUID : d4e4533d:3b19751a:82304262:55747e53
         Events : 0.2

    Number   Major   Minor   RaidDevice State
       0       8       11        0      active sync   /dev/sda11
       1       8       12        1      active sync   /dev/sda12
       2       8       13        2      active sync   /dev/sda13

[root@localhost ~]# cat /proc/mdstat
Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
md5 : active raid5 sda13[2] sda12[1] sda11[0]
      208640 blocks level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid1 sda10[2](F) sda9[3](F) sda8[0]
      104320 blocks [2/1] [U_]

md0 : active raid0 sda7[1] sda6[0]
      208640 blocks 64k chunks
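In the /proc/mdstat output, each U in the bracketed status stands for a working member and an underscore for a missing or failed one, so [UUU] is healthy and [U_] is degraded. A small sketch of a check built on that, where `degraded_arrays` is a hypothetical helper name and the sample input is the output shown above:

```shell
#!/bin/sh
# Print the name of every md array whose member-status field
# (for example [UUU] or [U_]) contains an underscore.
# Reads mdstat-formatted text from the given file, or from standard input.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/       { name = $1 }     # remember the current array
        /\[[U_]*_[U_]*\]/   { print name }    # status field with a missing member
    ' "$@"
}

# Sample input copied from the output above; on a live system run:
#   degraded_arrays /proc/mdstat
degraded_arrays <<'EOF'
Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
md5 : active raid5 sda13[2] sda12[1] sda11[0]
      208640 blocks level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid1 sda10[2](F) sda9[3](F) sda8[0]
      104320 blocks [2/1] [U_]

md0 : active raid0 sda7[1] sda6[0]
      208640 blocks 64k chunks
EOF
```

For the sample it prints md1, the mirror that has lost a member. md0 is never reported because RAID 0 has no status field.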

FORMAT THE RAID DEVICE WITH THE EXT3 JOURNALING FILE SYSTEM:

[root@localhost ~]# mke2fs -j /dev/md5

MOUNT THE RAID FILE SYSTEM:

[root@localhost ~]# mkdir /raid5
[root@localhost ~]# mount /dev/md5 /raid5

PERMANENT MOUNTING:

To make the mount persist across reboots, add an entry to the /etc/fstab file.

/dev/md5 /raid5 ext3 defaults 0 0

MARK A PARTITION (/dev/sda13) AS FAILED IN THE RAID DEVICE:

[root@localhost ~]# mdadm /dev/md5 --fail /dev/sda13
mdadm: set /dev/sda13 faulty in /dev/md5

[root@localhost ~]# mdadm --detail /dev/md5
/dev/md5:
        Version : 0.90
  Creation Time : Tue Apr 9 17:22:18 2013
     Raid Level : raid5
     Array Size : 208640 (203.78 MiB 213.65 MB)
  Used Dev Size : 104320 (101.89 MiB 106.82 MB)
   Raid Devices : 3
  Total Devices : 3
Preferred Minor : 5
    Persistence : Superblock is persistent

    Update Time : Wed Apr 10 08:53:03 2013
          State : clean, degraded
 Active Devices : 2
Working Devices : 2
 Failed Devices : 1
  Spare Devices : 0

         Layout : left-symmetric
     Chunk Size : 64K

           UUID : d4e4533d:3b19751a:82304262:55747e53
         Events : 0.4

    Number   Major   Minor   RaidDevice State
       0       8       11        0      active sync   /dev/sda11
       1       8       12        1      active sync   /dev/sda12
       2       0        0        2      removed

       3       8       13        -      faulty spare   /dev/sda13

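Now we can see that /dev/sda13 is marked as a faulty spare and the array is degraded. The partition is still listed in the array; to take it out completely, remove it with mdadm /dev/md5 --remove /dev/sda13.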

HOW TO ADD A PARTITION TO RAID?

[root@localhost ~]# mdadm /dev/md5 --add /dev/sda14
mdadm: added /dev/sda14

The above command adds the partition /dev/sda14 to the RAID 5 array, i.e. /dev/md5.

HOW TO CHECK WHETHER THE NEW PARTITION WAS ADDED TO THE RAID?

[root@localhost ~]# mdadm --detail /dev/md5

    Number   Major   Minor   RaidDevice State
       0       8       11        0      active sync   /dev/sda11
       1       8       12        1      active sync   /dev/sda12
       2       8       14        2      active sync   /dev/sda14

       3       8       13        -      faulty spare   /dev/sda13

Now you can clearly see that /dev/sda14 has been added to the RAID 5 array and is shown as active.
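The whole replacement sequence (fail the old member, remove it, add the new one) might be wrapped up as below. This is a sketch under assumptions: `run`, `replace_member` and `DRY_RUN` are hypothetical names, and the `--remove` step is an addition that the walkthrough above does not perform. By default the commands are only printed.

```shell
#!/bin/sh
# DRY_RUN=1 (the default) prints each command; DRY_RUN=0 executes it (needs root).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# replace_member MD_DEVICE OLD_MEMBER NEW_MEMBER   (hypothetical helper)
replace_member() {
    md="$1"; old="$2"; new="$3"
    run mdadm "$md" --fail "$old"      # mark the old member faulty
    run mdadm "$md" --remove "$old"    # detach it from the array
    run mdadm "$md" --add "$new"       # add the replacement; the array then rebuilds onto it
}

replace_member /dev/md5 /dev/sda13 /dev/sda14
```

While the rebuild is running, cat /proc/mdstat shows its progress.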

To see in detail what is happening with your RAID devices, you can use this command.

[root@localhost ~]# dmesg | grep -w md
md: md driver 0.90.3 MAX_MD_DEVS=256, MD_SB_DISKS=27
md: bitmap version 4.39
md: Autodetecting RAID arrays.
md: autorun ...
md: considering sda14 ...
md: adding sda14 ...
md: adding sda13 ...
md: adding sda12 ...

To get information about a particular RAID device, you can use this command.

[root@localhost ~]# dmesg | grep -w md5
md: created md5
md5: WARNING: sda12 appears to be on the same physical disk as sda11. True
md5: WARNING: sda13 appears to be on the same physical disk as sda12. True
md5: WARNING: sda14 appears to be on the same physical disk as sda13. True
raid5: allocated 3163kB for md5
raid5: raid level 5 set md5 active with 2 out of 3 devices, algorithm 2
EXT3 FS on md5, internal journal

If you have configured more than one RAID array on your machine and want details about all of them, you can use the command below.

[root@localhost ~]# mdadm --examine --scan
ARRAY /dev/md0 level=raid0 num-devices=2 UUID=db877de6:0a0be5f2:c22d99c7:e07fda85
ARRAY /dev/md1 level=raid1 num-devices=2 UUID=034c85f3:60ce1191:3b61e8dc:55851162
ARRAY /dev/md5 level=raid5 num-devices=3 UUID=d4e4533d:3b19751a:82304262:55747e5

The above command shows you all the RAID arrays configured on your machine, along with the number of disk devices each array uses. It also shows the UUID of each array.
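If only the device, level and member count are of interest, the ARRAY lines can be boiled down with a little awk. The sketch below assumes the line format shown above (`level=` and `num-devices=` fields present); other mdadm versions may print different fields, in which case the corresponding columns simply come out empty. `summarize_arrays` is a hypothetical helper name.

```shell
#!/bin/sh
# Turn "ARRAY ..." lines, as printed by `mdadm --examine --scan`,
# into "device level members" columns. Reads from standard input.
summarize_arrays() {
    awk '$1 == "ARRAY" {
        level = ""; n = ""
        for (i = 3; i <= NF; i++) {
            if ($i ~ /^level=/)       level = substr($i, 7)    # text after "level="
            if ($i ~ /^num-devices=/) n     = substr($i, 13)   # text after "num-devices="
        }
        print $2, level, n
    }'
}

# Sample input copied from the output above; on a live system run (as root):
#   mdadm --examine --scan | summarize_arrays
summarize_arrays <<'EOF'
ARRAY /dev/md0 level=raid0 num-devices=2 UUID=db877de6:0a0be5f2:c22d99c7:e07fda85
ARRAY /dev/md1 level=raid1 num-devices=2 UUID=034c85f3:60ce1191:3b61e8dc:55851162
ARRAY /dev/md5 level=raid5 num-devices=3 UUID=d4e4533d:3b19751a:82304262:55747e5
EOF
```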

Read: What is UUID of a partition

KNOW THE ADVANTAGES OF RAID 5:

- Read transaction rates on RAID 5 are very fast, because data is striped across the disks.

- With Linux software RAID, the number of devices in a RAID 5 array can be changed.

- The size of the individual devices can also be changed.

- The chunk size of a RAID 5 array can be changed.

- The layout can be changed as well.

- You get redundancy at the cost of only one disk's worth of capacity, however many disks are in the array.

- A 2-drive RAID 5 can be converted to RAID 1, and a RAID 5 with 3 or more drives can be converted to RAID 6.

- A 4-drive RAID 5 can be converted to a 6-drive RAID 6, provided two more drives are available.

ALSO HAVE A LOOK AT THE DISADVANTAGES OF RAID 5:

- Write transaction rates are a bit slow because of the parity calculation involved.

- RAID 5 is somewhat complex because of its configuration and parity handling.

- Rebuilding takes time because of the parity calculation involved.

- If one disk fails, the data from that disk has to be calculated from the data and parity on the other disks, which makes reads slow until the array is rebuilt.

A Brief Look at Linux Software RAID Levels:

RAID-0 supports striping.

RAID-1 supports mirroring.

RAID-4 and RAID-5 support parity.

What do you mean by the term HOT SPARE?

A hot spare is a standby disk that is automatically brought into the array when another disk fails.

What do you mean by HOT SWAPPING in RAID?

Hot swapping means that a disk can be replaced while the array is running.

Sarath Pillai

Satish Tiwary

Comments

which is better?

I am building a media server with 3 2GB HDs. Is RAID 5 better for me? And what terminal are you using?

Hi Edd,

Selection of raid depends upon the use cases. If you want a storage solution that will be used for heavy read and write operations then Raid 10 will be a good choice, but that will be a little bit expensive, as you will end up using more disks.

Does your configuration have 3 x 2 GB hard disks, or are you going to configure software RAID from different partitions made inside a single 32GB HD? For real-world applications software RAID is not a good solution, and it is even worse if you use software RAID on partitions of a single HD rather than on different HDs.

Regards

Sarath

Configuration of RAID

I have also tried many times to add and configure RAID in Linux, but failed every time. Now, from this article, I have learned a lot, so thanks for this.

http://www.techora.net/

More Easy Way

Is there any other easy way to configure RAID level 5? I have tried a lot of times but it fails again and again. Please tell me another way.

http://dailypunch.pk/
