How does indexing makes database faster

Most people ask why is database so faster when compared to flat file entries of data. There are many reasons behind that, one of them is indexing. Of course the speed of the underlying disk subsystem's also plays a major role in increasing database speed. Due to the heavy use of Relational database in almost all companies that employ heavy database, it has become very important to understand the internal working of the database, or i must say what factors makes a database efficient in terms of performance.

Let's get back to some computer basics before getting inside indexing in databases.

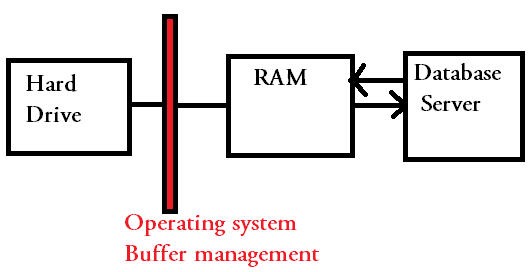

Recent advancement in computer hard disk's and storage has led to increased speed and performance of read and write operations. However volatile storage devices like RAM makes the faster read and write operations more seamless. Due to this reason the design of a computer operating system is made in such a way that data is never directly fetched from mechanical devices like hard disk's, but data is first transferred from hard disk to a faster device like RAM(volatile), from where applications and other programs directly fetch data on demand.

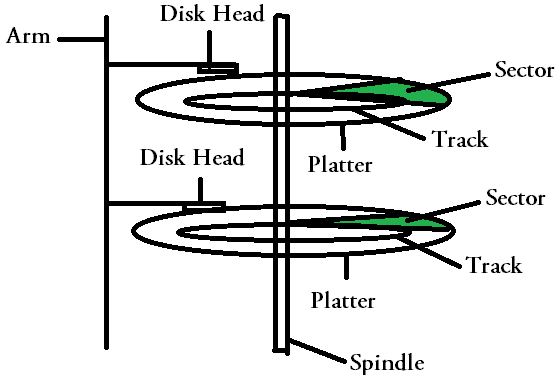

Different database engine's uses different strategies to store and retrieve data. We will begin this tutorial by understanding the idea behind hard disk, memory and then pages, because almost all databases has to inevitably use them in some or the other way. Hard disk's are sometimes referred to as secondary storage devices and data stored in hard disk's will persist across system reboots. Let's see how a hard disk internally look like.

In the above shown diagram, i have tried to depict the internal architecture of a mechanical hard drive. The "Platter's" are very much similar to a Compact disk in terms of appearance(but with a high storage space). There can be many platter's in a typical hard drive, but i have shown only two for this example.

Platter's are again striped by tracks, which again devided into sectors.(one platter contains multiple tracks)

The "Arm's" fetch data from different platter's, but to fetch data the "platter's" need to be rotated by the spindle. The speed of the "spindle" rotation is mentioned by most of the hard disk vendor's in terms of RPM(Rotations Per Minute.). For example, 7200 RPM & 15000 RPM.

The "disk head's" read's data from one sector at a time. So the "Arm" assembly needs to move the disk head's to and from the spindle, to read data from the appropirate sector.

Data present in the disk is always represented by the fixed size multiples of sector's. So if you want to access data from the hard drive the below steps are performed, which is the main source of performance overhead.

- Identify the correct track, where data is present & move the disk head to that track.

- Let the "spindle" rotate the platter, so that the correct sector comes beneath the "disk head". This process is also called as "seeking"

- Fetch the entire data from the beginning of the sector to the end of the sector.

Just imagine the overhead involved in retrieving data from a disk, especially if you want that data to be retrieved so fast. Due to this overhead involved, there is a primary volatile storage device used, which increases the performance of the data retrieval. The main reason behind the performance of this volatile storage called RAM is the fact that it has no mechanical moving parts like hard disks. Although they are high in performance, they are not used as permanent storage, because they are volatile(data is not persistent across reboots.)

Reading data from RAM is instantaneous. The process of transferring data from hard disk to RAM and back to hard disk from RAM is done by the operating system. In fact applications like database, makes use of this process with the help of a buffer management API provided by the operating system.

We already saw the overhead involved in fetching data from different sectors of a hard disk. But if the data is spread over contiguous(one after the other) sector's then it will improve the performance of fetching data. Because the spindle and head's does not require to move/rotate themselves, as data is available from sectors one after the other.

In order to improve performance the database server can always ask the operating system to arrange data in one after the other blocks.

"OPTIMIZE TABLE" in MySQL does the same thing with a particular table. On running the command the operating system will arrange the records from that table in blocks one after the other. This process is also sometimes called defragmenting. Defragment will improve the following things.

- Hard disk seek times gets reduced(as data is available from adjacent sectors)

- Wait time for the spindle to rotate

- Data fetching time

How does indexing in database makes its operations faster?

Let me take you through an example. Imagine that you are in a shop to purchase a book on TCP/IP. And you found one suitable book that contains all the information related to TCP/IP. The Shop keeper will never allow you to sit there and read the entire book!!. So what most of us do is to search for topics of our interest in that book.

How will you search a topic of your interest from a book for TCP/IP, that consists of 1000 pages. If you sit there and turn pages one by one, am sure that the shopkeeper will surely pat your back in some time. This is the main reason why authors implement "Table of content" or sometimes called an "Index". Most of the books do keep a couple of pages in the beginning reserved for Index. You can easily search your topic of interest from the table of contents, and directly reach the exact page number of your topic.

A database index is somewhat similar to this table of contents in a book. Indexing will help a data base query to be retrieved fast (Because the query does not require to go through the entire table to get the data, but it will find the data blocks from the index.).

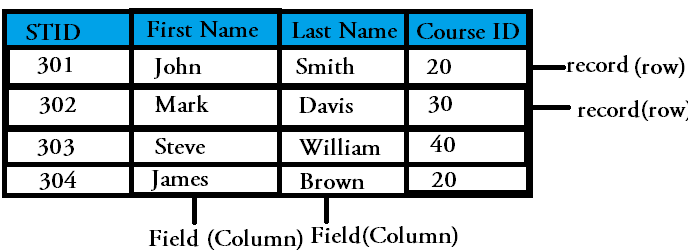

Lets get inside some more basics of a database table..

From the above shown example database table, you can clearly understand what is a record and what is a field. Suppose if the above database is a big one with 1 Lakh records which means each filed will have one lakh values.

Now if i want to search something out of that 1 lakh fields, a linear search will be take too long to complete, but what about a binary search(binary search is a fast searching algorithm, when you have a sorted list of values, either ascending or descending order.)

Read: What is Binary Search Algorithm

Imagine that we are using fixed length record in our above database(Which means all records will be of a fixed size.) So lets take an assumption of 204 bytes(this is only for simplicity in calculation) of record length. And imagine that our default block size is 1024 bytes. So we have the following.

Fixed Record Size = 204 bytes

Block Size = 1024 bytes

So Records Per Block = 1024/204 = 5 Records

And in the above example we took 1 lakh records as example, so..

1 Lakh/ 5 = 20000. So we require 20000 blocks for 1 Lakh records. So if you go by linear search you need to search through 20000 blocks to find a filed item. But if do a binary search then "log base 2 of 20000 is 14.287712". Which means you only need to go through sorted values 14 times to find a unique value of your interest using binary search.

Creating an index for any field in a table (creating an index of a unique value field in a table is a best method, because that field can be used to retrieve other fields.) will create another table containing sorted values of that field.

However being that said a large index of all fields in a table, will also cause a performance reduction. Imagine if you have an index as long a table, it will once again be a overhead to look through. Another important thing to keep in mind is that indexing will increase the query read performance, while it will reduce the write performance. Because if you change or alter a record, or say add a new entry in the database, it will do two write operation(one operation will write the record itself & the other operation will update the index). So below things must be kept in mind while making an index.

- Indexing each and every field in a table will reduce the write performance.

- Indexing a field with unique values in a table is advisable

- Fields that act as a join in relational database must be indexed, as they helps in complex queries across multiple tables.

- Indexing also uses disk space so be careful in selecting the fields you want to index

How to create an index for a field?

Lets create an index for a sample students database shown below.

mysql> select * from students; +------+--------+----------+ | stno | stname | courseid | +------+--------+----------+ | 301 | David | 20 | | 302 | Justin | 30 | | 303 | John | 20 | | 304 | Mark | 40 | | 305 | Joseph | 30 | | 306 | Martin | 40 | | 307 | James | 30 | +------+--------+----------+ 7 rows in set (0.00 sec)

Although that table is not that big to create an index, for showing this example index creation let's create an index for "stno" field.

mysql> CREATE INDEX id_index ON students (stno) USING BTREE; Query OK, 7 rows affected (0.11 sec) Records: 7 Duplicates: 0 Warnings: 0

Let's look at the contents of this index we just created.

mysql> show index from students; +----------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+ | Table | Non_unique | Key_name | Seq_in_index | Column_name | Collation | Cardinality | Sub_part | Packed | Null | Index_type | Comment | +----------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+ | students | 1 | id_index | 1 | stno | A | NULL | NULL | NULL | YES | BTREE | | +----------+------------+----------+--------------+-------------+-----------+-------------+----------+--------+------+------------+---------+ 1 row in set (0.00 sec) mysql>

Key points to note about indexes in database

- Indexes in database contains only sorted values, due to which searching a particular value in a field becomes faster

- Binary search algorithm is used to search from index which makes the searching even faster

- Database queries containing joins becomes much faster with indexing

- Disk Input Output operations can be reduced with the help of indexing in database

- If suppose there is a query that only retrieves data from an indexed field, the database server will only access index without accessing the complete data in the row.

- Indexing large number of fields in a table will make write operation slow

Sarath Pillai

Sarath Pillai Satish Tiwary

Satish Tiwary

Comments

Great!

Great!

Thank You Very much

Really helped enough to understand the basics

Great Article

Great Article

Awesome article...Very

Awesome article...Very knowledgeable...Good examples

Amazing Article

Really nice article to in depth basic knowledge from Disk to Index.Thanks for help

Easy to understand.

Nice article. You really have put all things at one place. Thanks!

indexing

great article, well explained.

Add new comment